Data Science Module Projects

Course leads

- Arturo Sánchez Pineda, Laboratoire d’Annecy de Physique des Particules (LAPP), France (previously ICTP, Italy, and CERN, Switzerland).

- Juan Carlos Basto Pineda, Universidad Industrial de Santander, Colombia.

- José Ocariz, Université de Paris, France.

- Camila Rangel Smith, The Alan Turing Institute, United Kingdom.

- Javier Solano, Universidad Nacional de Ingeniería, Peru.

- Ernesto Medina, Yachay Tech, Ecuador.

The final projects for the Data Science module are listed below. Most belong to the two tracks, High Energy Physics and Complex Systems, but some projects on other topics have also been proposed. Each one includes bibliographic resources and a brief description.

High Energy Physics

The general aim of the ATLAS Open Data and the released tools is to provide a straightforward interface to replicate the procedures used by high-energy-physics researchers and to enable users to experience the analysis of particle physics data in educational environments. It is therefore of significant interest to check that the 13 TeV ATLAS Open Data Monte Carlo (MC) simulation correctly models several Standard Model (SM) processes.

The official release document ATL-OREACH-PUB-2020-001 introduces several example physics analyses based on the 13 TeV ATLAS Open Data. They are inspired by, and follow as closely as possible, the procedures and selections of already published ATLAS Collaboration physics results.

Take a look at the 12 physics analysis examples at: http://opendata.atlas.cern/release/2020/documentation/physics/intro.html

You can use one of them as the starting point for your project! The idea is to optimise the analysis:

- define better cuts and selections;

- add or refine a particular study within the selected analysis;

- use Jupyter as the main framework to develop the analysis.

All the analyses have dedicated references in the ATLAS Open Data documentation.

Questions? Ask on Mattermost: Arturo Sánchez Pineda.

More details:

- four high statistics analyses with a selection of:

- W-boson leptonic-decay events,

- single-Z-boson events, where the Z boson decays into an electron–positron or muon–antimuon pair,

- single-Z-boson events, where the Z boson decays into a tau-lepton pair with a hadronically decaying tau-lepton accompanied by a tau-lepton that decays leptonically,

- top-quark pairs in the single-lepton final state.

Each of these analyses has sufficiently high event yields to study the SM processes in detail, and they are intended to show the generally good agreement between the released 13 TeV data and the MC prediction. They also enable the study of SM observables, such as the masses of the W and Z bosons and of the top quark.

- three low statistics analyses with a selection of single top-quarks produced in the single-lepton t-channel, diboson WZ events produced in the tri-lepton final state and diboson ZZ events produced in the fully-leptonic final states. These analyses illustrate the statistical limitations of the released dataset given the low production cross-section of the rare processes, where the variations between data and MC prediction are attributed to sizeable statistical fluctuations.

- three SM Higgs boson analyses with a selection of events in the H → WW, H → ZZ and H → γγ decay channels, which serve as examples to implement simplified analyses in different final-state scenarios and "re-discover" the production of the SM Higgs boson.

- two beyond-the-Standard-Model (BSM) physics analyses searching for new hypothetical particles: one implements the selection criteria of a search for direct production of superpartners of SM leptons, and the other implements the selection criteria of a search for new heavy particles that decay into top-quark pairs. Both are provided as simplified analyses for searching for new physics using different physics objects.
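Several of the analyses above rely on reconstructing a boson mass from its decay products. As a minimal sketch, assuming two leptons that can be treated as massless and described by transverse momentum, pseudorapidity and azimuthal angle, the dilepton invariant mass can be computed as follows. The function name and argument names are hypothetical and are not part of the ATLAS Open Data tools.

```python
import math


def invariant_mass(pt1, eta1, phi1, pt2, eta2, phi2):
    """Invariant mass of two leptons, neglecting the lepton masses.

    Uses m^2 = 2 pT1 pT2 [cosh(eta1 - eta2) - cos(phi1 - phi2)].
    The result has the same units as the transverse momenta.
    """
    m2 = 2.0 * pt1 * pt2 * (math.cosh(eta1 - eta2) - math.cos(phi1 - phi2))
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding errors


# Illustrative (made-up) event: two back-to-back leptons of 45.6 GeV each
mass = invariant_mass(45.6, 0.0, 0.0, 45.6, 0.0, math.pi)
print(f"m_ll = {mass:.1f} GeV")
```

Filling a histogram of this quantity for selected events in data and in MC is one simple way to compare the two around the Z peak.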

Some examples outside the analyses mentioned above:

AE13. Study of boosted Z → ee using fat electrons

- Dataset: ATLAS data

- Objective: In the search for a heavy resonance decaying as X → WZ → ℓνℓℓ, the acceptance × efficiency of the analysis decreases for electrons at signal masses above ∼2 TeV. The loss occurs because the boosted electrons get closer together and are removed by the usual electron isolation requirements. Different approaches to recover those events are currently under study.

Contact person(s):

AE14. Data driven backgrounds for same charge WW analysis

- Dataset: ATLAS data

- Objective: In the scattering of two W bosons of the same charge, an important background comes from other processes, such as ttbar or W+jets, in which one of the jets is misidentified as a lepton. The modelling in the usual simulations is not accurate enough, which motivates more complex approaches that estimate this background from measured data.

Contact person(s):

AE15. Validation of Simulations in the scattering of two same charge W bosons

- Dataset: ATLAS data

- Objective: In the last year, new simulations became available that increase the theoretical accuracy of simulations of the scattering of two W bosons with the same charge. These new simulations can only be used once they have been properly validated.

Contact person(s):

AE16. Analysis of Higgs boson decays to two tau leptons using data and simulation of events at the CMS detector from 2012

- Dataset: CMS open data

- Objective: This analysis uses data and simulation of events at the CMS experiment from 2012 to study decays of a Higgs boson into two tau leptons, in the final state with a muon and a hadronically decaying tau lepton. The analysis loosely follows the setup of the official CMS analysis published in 2014.

Contact person(s):

AE17. Sample with tracker hit information for tracking algorithm ML studies TTbar_13TeV_PU50_PixelSeeds

- Dataset: CMS open data

- Objective: This dataset consists of a collection of pixel doublet seeds, i.e. the hit pairs that could belong to the same particle flying through the CMS Silicon Pixel Detector. These can be used in ML studies of particle tracking algorithms. Particle tracking is the process of clustering the recorded hits into groups of points arranged along a helix.

Contact person(s):

Complex Systems

SC1. Simulation of the 2-D or 3-D Ising model, observation of the phase transition and estimation of some critical exponents (Monte Carlo)

- Description: Set up an importance-sampling Monte Carlo scheme to compute the equilibrium state of an Ising model of a magnetic system, accounting for boundary conditions and finite-size scaling. Find the critical exponents by collapsing data from different system sizes.
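One common importance-sampling scheme for this problem is the single-spin-flip Metropolis algorithm. The sketch below is only a possible starting point, assuming a 2-D square lattice, coupling J = 1, k_B = 1, zero external field and periodic boundary conditions; the function names and default parameters are illustrative choices, not part of the project statement.

```python
import numpy as np


def metropolis_sweep(spins, beta, rng):
    """One sweep: L*L single-spin-flip attempts on an L x L periodic lattice."""
    L = spins.shape[0]
    for _ in range(L * L):
        i, j = rng.integers(0, L, size=2)
        # Sum of the four nearest neighbours (periodic boundaries)
        nn = (spins[(i + 1) % L, j] + spins[(i - 1) % L, j]
              + spins[i, (j + 1) % L] + spins[i, (j - 1) % L])
        dE = 2.0 * spins[i, j] * nn  # energy change if spin (i, j) is flipped
        if dE <= 0 or rng.random() < np.exp(-beta * dE):
            spins[i, j] *= -1


def mean_abs_magnetization(L, T, n_equil=100, n_meas=100, seed=0):
    """Average |m| per spin at temperature T after n_equil equilibration sweeps."""
    rng = np.random.default_rng(seed)
    spins = np.ones((L, L), dtype=int)  # cold start: all spins up
    beta = 1.0 / T
    for _ in range(n_equil):
        metropolis_sweep(spins, beta, rng)
    samples = []
    for _ in range(n_meas):
        metropolis_sweep(spins, beta, rng)
        samples.append(abs(spins.mean()))
    return float(np.mean(samples))


# Well below and well above the 2-D critical temperature (about 2.27 for J = 1)
for T in (1.0, 5.0):
    print(f"T = {T}: <|m|> = {mean_abs_magnetization(8, T):.3f}")
```

Repeating the measurement for several lattice sizes L and temperatures close to the transition gives the curves needed for a finite-size-scaling collapse.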

Contact person(s):

SC2. Calculate complex integrals by Monte Carlo (used in high energy physics)

- Description: Calculating an integral amounts to computing the area under a curve. In particle physics, the integrals associated with cross sections are multidimensional and can be hard to evaluate analytically. Monte Carlo can address them in a very simple way.
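As an illustration of the idea, the sketch below estimates a multidimensional integral by averaging the integrand over uniformly sampled points and reports the statistical error of the estimate. The test integrand (the volume of the unit ball in four dimensions, whose exact value is π²/2) and the function name `mc_integrate` are assumptions chosen for the example.

```python
import numpy as np


def mc_integrate(f, lower, upper, n_samples=100_000, seed=0):
    """Plain Monte Carlo estimate of the integral of f over a hyper-rectangle.

    f must accept an array of shape (n_samples, dim) and return n_samples values.
    Returns (estimate, statistical_error).
    """
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    volume = np.prod(upper - lower)
    x = rng.uniform(lower, upper, size=(n_samples, lower.size))
    values = f(x)
    estimate = volume * values.mean()
    error = volume * values.std(ddof=1) / np.sqrt(n_samples)
    return estimate, error


def inside_unit_ball(x):
    """Indicator function: 1 inside the unit ball, 0 outside."""
    return (np.sum(x**2, axis=1) <= 1.0).astype(float)


estimate, error = mc_integrate(inside_unit_ball, [-1.0] * 4, [1.0] * 4)
print(f"MC estimate: {estimate:.4f} +/- {error:.4f}")
print(f"exact value: {np.pi**2 / 2:.4f}")
```

The error decreases as 1/sqrt(n_samples) regardless of the number of dimensions, which is why the method remains practical for the multiple integrals mentioned above.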

Contact person(s):

SC3. Law of radioactive decay and decay chains derived from Monte-Carlo

- Description: Determine the history of a radioactive atom from a sequence of random numbers: suppose that the first (m-1) successive random numbers are higher than 0.1 and that the m-th number is lower than 0.1. This sequence of random numbers represents the history of an atom that survives for (m-1) time intervals and decays during the m-th time interval. Generalize this approach to the chain A -> B -> C.
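The procedure described above can be sketched for many atoms at once. In this minimal example every atom draws one random number per time interval; an atom of type A decays to B when its number is below 0.1, as in the description. The decay probability of B to C (0.05 per interval) and the function name are assumptions made only for illustration.

```python
import numpy as np


def simulate_chain(n_atoms, n_steps, p_ab=0.1, p_bc=0.05, seed=0):
    """Simulate the chain A -> B -> C; returns counts[t] = (N_A, N_B, N_C)."""
    rng = np.random.default_rng(seed)
    state = np.zeros(n_atoms, dtype=int)  # 0 = A, 1 = B, 2 = C
    counts = np.zeros((n_steps + 1, 3), dtype=int)
    counts[0] = np.bincount(state, minlength=3)
    for t in range(1, n_steps + 1):
        u = rng.random(n_atoms)  # one random number per atom per interval
        # Build both masks before updating, so an atom that has just
        # become B cannot also decay to C within the same interval.
        a_decays = (state == 0) & (u < p_ab)
        b_decays = (state == 1) & (u < p_bc)
        state[a_decays] = 1
        state[b_decays] = 2
        counts[t] = np.bincount(state, minlength=3)
    return counts


counts = simulate_chain(n_atoms=100_000, n_steps=20)
for t in (0, 5, 10, 20):
    n_a = counts[t, 0]
    print(f"t = {t:2d}: N_A = {n_a:6d}, expected about {100_000 * 0.9**t:8.0f}")
```

The simulated number of A atoms follows the discrete decay law N_A(t) ≈ N_0 (1 - p)^t, which approaches the exponential law when p is small.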

Contact person(s):

SC4. Slowing down of fast neutrons (E < 1 MeV) (Monte Carlo)

- Description: Assume a homogeneous plate of thickness h bombarded perpendicularly by a flux of neutrons of energy E. Neutrons penetrate the plate and can either scatter elastically or be absorbed. In any given collision, scattering is assumed to be equally probable in all directions. Compute the probability that a neutron traverses the plate, is absorbed, or is reflected.
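A minimal sketch of such a simulation is given below. It makes several simplifying assumptions that are not in the project statement: lengths are measured in mean free paths, the absorption probability per collision `p_abs` is constant, and the energy loss in each collision is ignored, so only the three outcome probabilities are estimated. All names are illustrative.

```python
import numpy as np


def neutron_slab(h, p_abs, n_neutrons=20_000, seed=0):
    """Fractions of neutrons transmitted, absorbed and reflected by a slab.

    h     : slab thickness in units of the mean free path
    p_abs : probability that a collision ends in absorption
    """
    rng = np.random.default_rng(seed)
    transmitted = absorbed = reflected = 0
    for _ in range(n_neutrons):
        x, mu = 0.0, 1.0  # start at the surface, moving perpendicular to it
        while True:
            x += mu * rng.exponential(1.0)  # free flight to the next collision
            if x >= h:
                transmitted += 1
                break
            if x < 0.0:
                reflected += 1
                break
            if rng.random() < p_abs:
                absorbed += 1
                break
            # Isotropic scattering: the direction cosine is uniform in [-1, 1]
            mu = rng.uniform(-1.0, 1.0)
    n = float(n_neutrons)
    return {"transmitted": transmitted / n,
            "absorbed": absorbed / n,
            "reflected": reflected / n}


print(neutron_slab(h=2.0, p_abs=0.2))
```

A useful cross-check is the purely absorbing case (`p_abs = 1`), where the transmitted fraction should approach exp(-h).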

Contact person(s):

SC5. Fluid Flow in a pipe: Poiseuille Law via Molecular Dynamics

- Description: Set up a molecular dynamics simulation in 2D inside a pipe with no-slip boundary conditions at the pipe walls. After the transient behavior, determine the flow profile and fit it to Poiseuille flow. Determine the viscosity of the displaced fluid.

Contact person(s):

SC6. Hard sphere gas and irreversibility by Molecular Dynamics

- Description: Molecular dynamics of molecules interacting via hard potentials must be solved in a way that is qualitatively different from the molecular dynamics of soft bodies. It provides the highest-precision evolution of the equations of motion. In this project it will serve to test irreversibility, that is, how a gas forgets its initial conditions.

Contact person(s):

SC7. Dynamics of simple fluids and transport coefficients by Molecular dynamics

- Description: Molecular dynamics with phenomenological potentials, using linear response to compute transport coefficients such as the diffusion constant, the viscosity and the thermal conductivity.
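As one example of extracting a transport coefficient from trajectories, the sketch below estimates the diffusion constant from the mean squared displacement through the Einstein relation MSD(t) ≈ 2 d D t, which is equivalent to the linear-response (Green-Kubo) result at long times. To keep the example self-contained, the trajectories are synthetic random walks with a known D standing in for real molecular dynamics output; the function name and array layout are assumptions.

```python
import numpy as np


def diffusion_from_msd(positions, dt):
    """Estimate D from unwrapped positions of shape (n_frames, n_particles, dim)."""
    n_frames, _, dim = positions.shape
    disp = positions - positions[0]                  # displacement from first frame
    msd = np.mean(np.sum(disp**2, axis=2), axis=1)   # average over particles
    t = dt * np.arange(n_frames)
    half = n_frames // 2                             # fit only the late-time part
    slope = np.polyfit(t[half:], msd[half:], 1)[0]
    return slope / (2 * dim)


# Synthetic stand-in for MD output: Brownian motion with known D (made-up values)
rng = np.random.default_rng(1)
d_true, dt = 0.5, 0.01
steps = rng.normal(scale=np.sqrt(2 * d_true * dt), size=(200, 2000, 3))
positions = np.cumsum(steps, axis=0)

print(f"D estimated = {diffusion_from_msd(positions, dt):.3f}, D true = {d_true}")
```

With real simulation data, the positions must be unwrapped across periodic boundaries before computing the displacements.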

Contact person(s):

Suggested references

- Kroese, D. P., Taimre, T., & Botev, Z. I. (2011). Handbook of Monte Carlo methods. Wiley.

- Frenkel, D., & Smit, B. (2001). Understanding molecular simulation: from algorithms to applications (Vol. 1). Elsevier.

- Dill, K., & Bromberg, S. (2012). Molecular driving forces: statistical thermodynamics in biology, chemistry, physics, and nanoscience. Garland Science.

- Allen, M. P., & Tildesley, D. J. (2017). Computer simulation of liquids. Oxford University Press.

- Haile, J. M. (1992). Molecular dynamics simulation: elementary methods. John Wiley & Sons.

Other projects

OP1. Scientific computing: design and deployment of physics data analysis pipelines with in-house and cloud computing.

- Dataset: LHC experiments Open Data at 8 and 13 TeV

- Objectives:

- Train the student to design data analysis in terms of the resources, the procedures, versioning and maintainability.

- Develop a culture of reproducibility and proper analysis development protocols.

- Get into the so-called "Big Data" by prototyping analysis using Open Data.

- Present the final products in a modern way, under the proper licensing and DOI identification.

Contact person(s):

Note: Highly recommended!!

OP2. Exploring indicators that reflect the incidence of Covid-19 in Venezuela using unofficial data sources

- Description: In this project we will try to estimate the incidence of Covid-19 cases in Venezuela using alternative, unofficial data from the internet. Different data sources will be explored to determine which indicators best reflect the magnitude of the Covid-19 case incidence in Venezuela at a given time. The final product of this project may be published in Turing Data Stories, a project that combines citizen data journalism and open-software culture. An intermediate level of English would be useful for this project, but it is not mandatory.

- Tools/methods to use and learn: web scraping, data visualization and communication, and time-series analysis.

- Data to use: Google Trends, Twitter API, GoFundMe, JustGiving, and others to be explored.

- Background:

- https://twitter.com/CamilaRangelS/status/1376286680584482817?s=20

- https://twitter.com/LuisCarlos/status/1379203638082613253?s=20

Contact person(s):

- Camila Rangel Smith.

- Harvey Maddocks (co-supervisor, Data Scientist at Kennedys IQ, London).

OP3. ‘AI Commons’: A framework for collaboration to achieve global impact

- Dataset: Need to contact the community to get the datasets. These are datasets released in collaboration with the UN.

- Objective: A common knowledge hub to accelerate solutions to the world’s challenges with Artificial Intelligence.

- Comments: It might be better to use these datasets for the AI course in the second semester. Here is the link: https://ai-commons.org

Contact person(s):

OP4. Data Analysis with images from NASA NOAA

- Dataset: NASA-NOAA

Contact person(s):

OP5. Data Analysis with images from ESA

- Dataset: ESA

Contact person(s):

OP6. Data Analysis with images from Mars

- Dataset: NASA - Mars 2020-2021

Contact person(s):

OP7. Data Analysis with Art images from The Louvre

- Dataset: Louvre Museum

Contact person(s):

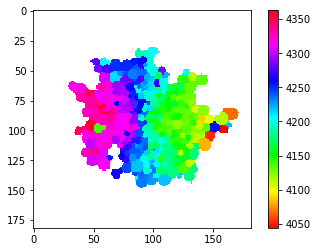

OP8. Scientific Data Analysis of Galaxy Velocity Maps

- Description: Velocity maps of galaxies are a valuable tool for learning about the dynamical state and formation history of these systems. In this project, the students will build a professional tool for the analysis of this kind of data, which allows one to fit different models to the data using Monte Carlo and to estimate the best-fit model parameters and their uncertainties through χ² minimization and the analysis of likelihood contours. The students will receive support in good programming practices and object-oriented programming.

- Sources:

- Note: You are not expected to understand many details from the references at this point. Take a quick look at them and get in touch to hear details if you are interested.

Contact person(s):

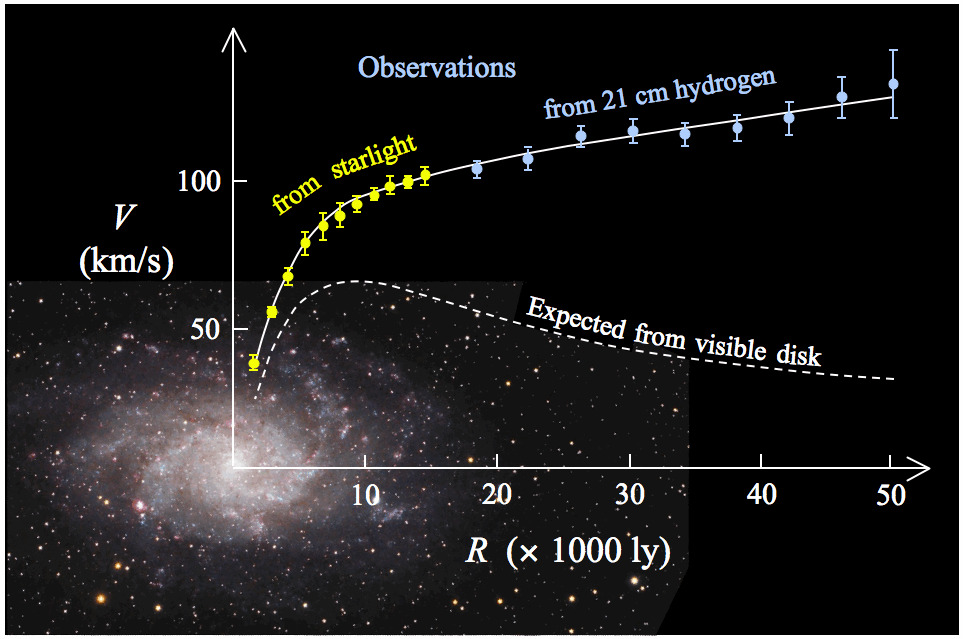

OP9. Dark Matter Inferences From Rotation Curves

- Description: As dark matter cannot be seen, one way to measure its distribution in galaxies is through the internal motions of their gas and stars. The goal of this project is to develop modular software to study the rotation curves of dwarf galaxies by fitting them with theoretical models of their dark matter distribution using Monte Carlo. This includes estimating the best-fit model parameters and their uncertainties through χ² minimization and the analysis of likelihood contours, as well as the cross-comparison of different models based on the goodness of fit. The students will receive support in good programming practices and object-oriented programming.

- Sources:

- Note: You are not expected to understand many details from the references at this point. Take a quick look at them and get in touch to hear details if you are interested.
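To give a flavour of the fitting step described in this project, the sketch below fits a rotation curve with a Metropolis random walk whose acceptance rule uses exp(-χ²/2) as the likelihood. Everything specific here is an assumption made for illustration: the pseudo-isothermal halo model, the synthetic data generated from made-up "true" parameters, the step sizes and all function names. It is not the tool the project asks for, only a possible starting point.

```python
import numpy as np


def v_model(r, v_inf, r_c):
    """Pseudo-isothermal halo rotation curve (one possible model choice)."""
    return v_inf * np.sqrt(1.0 - (r_c / r) * np.arctan(r / r_c))


def chi2(params, r, v_obs, v_err):
    v_inf, r_c = params
    if v_inf <= 0 or r_c <= 0:  # flat prior restricted to positive values
        return np.inf
    return float(np.sum(((v_obs - v_model(r, v_inf, r_c)) / v_err) ** 2))


def metropolis_fit(r, v_obs, v_err, start, step, n_steps=20_000, seed=0):
    """Random-walk Metropolis sampling of the likelihood exp(-chi2 / 2)."""
    rng = np.random.default_rng(seed)
    current = np.asarray(start, dtype=float)
    chi2_cur = chi2(current, r, v_obs, v_err)
    chain = np.empty((n_steps, 2))
    chi2_chain = np.empty(n_steps)
    for k in range(n_steps):
        proposal = current + step * rng.normal(size=2)
        chi2_prop = chi2(proposal, r, v_obs, v_err)
        # Accept with probability min(1, exp(-(chi2_prop - chi2_cur) / 2))
        if np.log(rng.random()) < -0.5 * (chi2_prop - chi2_cur):
            current, chi2_cur = proposal, chi2_prop
        chain[k] = current
        chi2_chain[k] = chi2_cur
    return chain, chi2_chain


# Synthetic data (made up): true v_inf = 60 km/s, r_c = 1.5 kpc, 3 km/s errors
rng = np.random.default_rng(42)
r = np.linspace(0.5, 10.0, 20)
v_err = np.full_like(r, 3.0)
v_obs = v_model(r, 60.0, 1.5) + rng.normal(scale=v_err)

chain, chi2_chain = metropolis_fit(r, v_obs, v_err, start=(50.0, 1.0),
                                   step=np.array([1.0, 0.1]))
best = chain[np.argmin(chi2_chain)]
sigma = chain[5000:].std(axis=0)  # discard the first 5000 steps as burn-in
print(f"v_inf = {best[0]:.1f} +/- {sigma[0]:.1f} km/s")
print(f"r_c   = {best[1]:.2f} +/- {sigma[1]:.2f} kpc")
```

The samples kept after burn-in can be histogrammed in the (v_inf, r_c) plane to draw likelihood contours, and the minimum χ² of each model can be used to compare different halo profiles.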

Contact person(s):

Free choice

- Do you have an experiment in mind, either in the area of high energy physics (FAE) or complex systems (SC)? We can discuss it! Have the following at hand:

Working arrangements

- In pairs or groups of three.

- As far as possible, form groups with students from different universities, even better if they are from different countries.

- Once you have decided which project you want, send us the names of your team members and the chosen project on Mattermost, channel "MD-1 Ingeniería de software para la investigación". Mattermost will be our preferred communication channel.

- Write to your project's contact person to get started (keep the course leads in the loop).

Deliverables

The submission consists of the following parts:

- Report in PDF with the problem statement, solution strategy, analysis, results, etc. It is a self-contained document but without excessive technical detail. It does not include the code.

- GitLab (or GitHub) repository containing the report, all the code (very well structured and documented), additional documentation, etc. It must have a very clear README.md and be as self-contained as possible. A possible exception is the raw data itself, depending on its volume or nature. In that case, however, you must state where and how the data are obtained, so that the study can be reproduced.

- Video, which replaces a typical oral defense. We want to minimize connection problems and keep an interesting gallery of your presentations, so the video must be pleasant and well presented, in addition to correctly covering the essential content. Maximum length: 15 minutes.

Schedule

- Week 1 (April 5-11, 2021): Project selection and literature review

- Weeks 2-5 (April 12-May 9, 2021): Execution

- Week 6 (May 10-14, 2021): Presentation + submission of the report

- May 10 (midnight COL/PER/ECU; +1h VEN): Share the link to the repository, which must also contain the report. Do this directly in the Mattermost channel dedicated to the projects.

- May 12 (midnight COL/PER/ECU; +1h VEN): Add to the repository README the link to your video on an online platform, making sure we have permission to view it.

- May 13: Round of project defenses